The overarching goal of our research is to develop computational models to describe the functioning of the visual system at multiple levels, ranging from behavioral to neuronal. We work on building models that can explain a wide range of phenomena, including the effects of expectation, context, adaptation, and perceptual learning on visual processes. To do so, we conduct behavioral and neuroimaging experiments and build our models based on the results of those experiments. Some of the active projects are listed below.

Predictive Processing in Visual Perception

Sensory information we experience about the hidden states of the world is often incomplete, weak, ambiguous, or noisy. Recognizing a stimulus from the sensory input alone can therefore be very difficult. Nevertheless, our visual system usually arrives at a useful interpretation of a visual scene quickly, because prior knowledge facilitates perception while we make decisions. In this line of work, we investigate how prior knowledge and expectations affect early visual processes. We use psychophysics, computational modeling, and fMRI to unravel the effects of expectation on visual perception and the computational mechanisms underlying those effects. (This project was partly supported by funds from TUBITAK.) Here are some relevant readings:

Malik, A, Doerschner, K, Boyaci, H (2023). Unmet expectations about material properties delay perceptual decisions. Vision Research, 208. https://doi.org/10.1016/j.visres.2023.108223 (Also bioRxiv https://www.biorxiv.org/content/10.1101/2022.07.28.501825)

Urgen, BM, Boyaci, H. (2021). Unmet expectations delay sensory processes. Vision Research, 181, 1-9, https://doi.org/10.1016/j.visres.2020.12.004 (Also bioRxiv, https://doi.org/10.1101/545244)

Urgen, BM, Boyaci, H (2021). A recurrent cortical model can parsimoniously explain the effect of expectations on sensory processes. bioRxiv, https://doi.org/10.1101/2021.02.05.429913
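
As a rough illustration of how prior knowledge can help when the sensory input is noisy, the sketch below combines a Gaussian prior with a Gaussian sensory measurement. This is a textbook simplification and not the recurrent cortical model described in the readings above; the function name `combine_gaussian` and all numerical values are hypothetical and chosen only for illustration.

```python
def combine_gaussian(prior_mean, prior_var, obs, obs_var):
    """Posterior mean and variance for a Gaussian prior and a Gaussian likelihood.

    Illustrative assumption: both the prior expectation and the sensory
    measurement are modeled as one-dimensional Gaussians.
    """
    # Weight given to the sensory observation; it shrinks as sensory noise grows.
    w_obs = prior_var / (prior_var + obs_var)
    post_mean = prior_mean + w_obs * (obs - prior_mean)
    post_var = prior_var * obs_var / (prior_var + obs_var)
    return post_mean, post_var


# Hypothetical values: the prior expects a feature value of 0, the input says 10.
reliable = combine_gaussian(prior_mean=0.0, prior_var=4.0, obs=10.0, obs_var=1.0)
noisy = combine_gaussian(prior_mean=0.0, prior_var=4.0, obs=10.0, obs_var=16.0)

print("reliable input:", reliable)  # estimate stays close to the observation
print("noisy input:   ", noisy)     # estimate is pulled toward the prior
```

Under these assumptions, the estimate relies more heavily on the prior as the sensory measurement becomes noisier, which is one simple way to think about why expectations matter most when the input is weak or ambiguous.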

Center-surround Interaction & Mechanisms of Neural Activity

Center-surround interaction, through which behavioral and neural responses to a stimulus are modulated by other stimuli presented in its surround, is a common working principle in the visual system. It is present at nearly all levels, from the retina to higher-level cortical areas. These interactions lead to suppression or facilitation of neural activity. There are, however, still many unanswered questions related to this mechanism. For example, how does the extent of spatial attention affect this center-surround interaction? In this line of research, we use a well-known motion perception effect and behavioral and fMRI methods to investigate the interaction of center and surround in the human visual system. This allows us to test computational models of neuronal interactions at early levels of the visual system. (This project was partly supported by funds from TUBITAK). For more about this research:

Kiniklioglu, M, Boyaci, H (2024). hMT+ Activity Predicts the Effect Of Spatial Attention On Surround Suppression. bioRxiv, 2024.10.10.617537. https://doi.org/10.1101/2024.10.10.617537

Kiniklioglu, M, Boyaci, H (2022). Increasing spatial extent of attention strengthens surround suppression. Vision Research, 199. https://doi.org/10.1016/j.visres.2022.108074 (Also bioRxiv

Er, G, Pamir, Z, Boyaci, H (2020). Distinct patterns of surround modulation in V1 and hMT+. NeuroImage, 220, 117084, https://doi.org/10.1016/j.neuroimage.2020.117084. (Also bioRxiv, https://doi.org/10.1101/817171)

Turkozer, HB, Pamir, Z, Boyaci, H (2016). Contrast Affects fMRI Activity in Middle Temporal Cortex Related to Center-Surround Interaction in Motion Perception. Frontiers in Psychology, 7:454, 1-8, http://journal.frontiersin.org/article/10.3389/fpsyg.2016.00454/full, doi: 10.3389/fpsyg.2016.00454

Effects of Adaptation & Mechanisms of Neural Activity

Prolonged exposure to an adapter stimulus affects the perceived features of a subsequently presented test stimulus. This phenomenon causes well-known effects such as repulsive size adaptation and the tilt aftereffect. For example, in the size adaptation effect, if the adapter is larger than the test, the test object is perceived as smaller than its veridical size. Conversely, when the adapter is smaller than the test, the test object is perceived as larger than its veridical size. In previous studies, the test stimulus has always been presented in the same location as the adapter stimulus. The spatial extent of these aftereffects had not been systematically studied, and what happens in the rest of the visual field was not known. In this line of research, contrary to the assumptions in the literature, we find that the effect of adaptation extends across the entire visual field. Further, we develop neural computational models to explain the local and non-local effects of adaptation. Here are some relevant readings:

Boyaci, H (2024). Normalization with Dynamic Weights Predicts Neural and Behavioral Effects of Orientation Adaptation. MODVIS 2024: Computational and Mathematical Models in Vision, A satellite workshop to the 2024 Vision Sciences Society Annual Meeting. https://docs.lib.purdue.edu/cgi/viewcontent.cgi?article=1235&context=modvis

Gurbuz, T, Boyaci, H (2023). Tilt aftereffect spreads across the visual field. Vision Research, 205. https://doi.org/10.1016/j.visres.2022.108174 (Also bioRxiv, https://doi.org/10.1101/2022.06.21.496978)

Altan, E, Boyaci, H (2020). Size aftereffect is non-local. Vision Research, 176, pages 40-47, https://doi.org/10.1016/j.visres.2020.07.006. (Also bioRxiv, https://doi.org/10.1101/2020.03.19.998161)
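
The direction of the repulsive size aftereffect described in this section can be captured in a toy sketch. The power-law form, the function name `perceived_size`, and the `gain` value below are all hypothetical and chosen only to reproduce the qualitative direction of the effect; they are not taken from the studies listed above.

```python
def perceived_size(test_size, adapter_size, gain=0.1):
    """Toy repulsive size aftereffect (illustrative assumption, not a fitted model).

    The perceived size is pushed away from the adapter size:
    a larger adapter shrinks the test, a smaller adapter enlarges it.
    """
    return test_size * (test_size / adapter_size) ** gain


test = 2.0  # hypothetical test size, in arbitrary units
after_large_adapter = perceived_size(test, adapter_size=4.0)
after_small_adapter = perceived_size(test, adapter_size=1.0)
after_equal_adapter = perceived_size(test, adapter_size=2.0)

print(after_large_adapter < test)   # test looks smaller than it is
print(after_small_adapter > test)   # test looks larger than it is
print(after_equal_adapter == test)  # no shift when adapter and test match
```

This sketch deliberately ignores spatial location; modeling how the aftereffect varies with the distance between adapter and test would require additional assumptions.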

Effect of Context on Perceived Visual Features

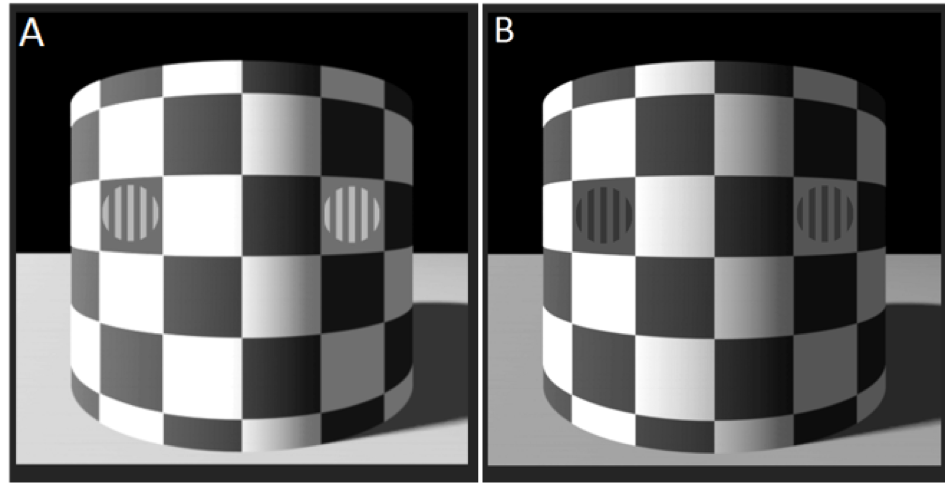

It is well known that context affects perceived attributes of objects, such as size, color, lightness (perceived relative reflectance of a surface), and motion. In this line of research, we conduct extensive studies to understand the behavioral and neural mechanisms underlying these contextual effects. For example, in one study, we investigated whether the perceived contrast of a grating varies with the luminance or lightness of its background. Suppose that we superimpose grating patterns on patches with equal luminance but different lightness (see the figure; in each image, the squares with gratings are identical). What happens to the perceived contrast of those gratings? Is contrast perception affected by context-dependent lightness? In this series of studies, we seek answers to these questions using psychophysical and functional magnetic resonance imaging (fMRI) methods. (These projects were partly supported by funds from TUBITAK.)

Here are some relevant readings:

, H (2024). Neural correlates of dynamic lightness induction. Journal of Vision, Vol.24, 10. https://doi.org/10.1167/jov.24.9.10. (Also bioRxiv, https://www.biorxiv.org/content/10.1101/2023.07.28.550941v1)

Pamir, Z, Boyaci, H (2016). Context-dependent Lightness Affects Perceived Contrast. Vision Research, 124: 24-33. https://doi.org/10.1016/j.visres.2016.06.003.

Boyaci, H., Fang, F., Murray, S.O., and Kersten, D. (2007). Responses to lightness variations in early human visual cortex. Current Biology, 17: 989-993. http://dx.doi.org/10.1016/j.cub.2007.05.005.

Murray, S.O., Boyaci, H., and Kersten, D. (2006). The representation of perceived angular size in human primary visual cortex. Nature Neuroscience. 9: 429-434. http://dx.doi.org/10.1038/nn1641

Boyaci, H., Maloney, L. T., and Hersh, S. (2003). The effect of perceived surface orientation on perceived surface albedo in binocularly viewed scenes. Journal of Vision, 3: 541-553. http://dx.doi.org/10.1167/3.8.2

Perceptual Learning & Brain Plasticity

Extensive training on a visual feature can improve performance on various tasks related to that feature. For example, a person can become much better at detecting the direction of motion in a noisy and weak stimulus after long hours of training. This is called visual perceptual learning. This kind of learning is often specific to the trained stimulus, for example, to its location and features. In this project, we investigate the effect of training on a visual task and its neuronal correlates using magnetic resonance imaging. In the long run, we want to build models that can account for both the short-term (i.e., adaptation) and long-term (i.e., learning) effects of prolonged exposure to stimuli and tasks. (This project was partly supported by funds from TUBITAK.)